- June 22 2020

Shopping Search Engines: Applying Computer Vision in Retail

Facebook CEO, Mark Zuckerberg, recently announced the launch of their new shopping initiative, Facebook Shops. He also talked about the advanced AI technology that powers their new shopping experiences (link).

ViSenze is one of the pioneers in applying computer vision to retail shopping search engines, and we are very excited to see another large-scale adoption of computer vision technology in real-world use cases such as visual search and image tagging. Computer vision is fast becoming a mainstream technology across retail and online shopping. So what is it about computer vision that truly opens up new windows for shopping?

Technology behind the ViSenze visual shopping search engine

ViSenze’s snap and search technology for shopping search engines enables customers to snap a photo and find products from more than 1000 ecommerce sites globally. The technology has already been integrated with most of the major mobile OEMs in the world. Presently, more than 200 million activated mobile users worldwide use ViSenze Shopping daily. ViSenze Shopping is ViSenze’s snap and search feature, embedded into the native camera lens of smartphones from Huawei, Samsung, Vivo, Oppo and LG. This shortens the path to conversion by instantly bringing shoppers to the most relevant products.
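The snap and search flow described above can be pictured as a nearest-neighbour lookup over image embeddings. The following is only a minimal sketch under stated assumptions, not ViSenze's implementation: the three-dimensional vectors, the product IDs and the `visual_search` helper are all hypothetical, and a real system would obtain embeddings from a trained vision model and use an approximate index rather than a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def visual_search(query_embedding, catalog, top_k=3):
    """Return the top_k (product_id, score) pairs most similar to the query."""
    scored = [
        (product_id, cosine_similarity(query_embedding, embedding))
        for product_id, embedding in catalog.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical catalog: product IDs and toy embeddings made up for illustration.
CATALOG = {
    "red-sneaker": [0.9, 0.1, 0.0],
    "red-boot": [0.7, 0.3, 0.1],
    "blue-handbag": [0.0, 0.2, 0.9],
}

# Stand-in for the embedding of the photo the shopper just snapped.
results = visual_search([0.85, 0.15, 0.05], CATALOG, top_k=2)
for product_id, score in results:
    print(product_id, round(score, 3))
```

In this toy catalog the two footwear items rank ahead of the handbag because their vectors point in nearly the same direction as the query.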

Over years of evolution, we have developed deeper technologies for product understanding and recognition that address the inherent challenges of search and discovery in a marketplace:

- One challenge in satisfying the wide range of preferences across user segments is ensuring that our product coverage enables a good discovery experience. We use various approaches to surface a diverse set of merchants, and we analyze user search history to improve coverage. In terms of catalog richness, we have the widest coverage in the market when it comes to product attributes. In addition to Fashion, Home and Vehicles (which Facebook also covers), we also cover Groceries, Digital Devices and Appliances, to name a few.

- Since the marketplace spans many different product categories, it is naturally difficult for one computer vision model to achieve optimum performance on all the categories listed above. To support this breadth of coverage, we have created multiple models. We also improved the model architecture to ensure balanced accuracy across categories. Like Facebook’s ‘GrokNet’ model framework mentioned in their latest publication, we adopted a unified model approach, which incorporates different loss functions and optimizes for both exact product recognition accuracy and category relevance, to achieve good performance across different categories.

- Another common issue in running a large marketplace is taxonomy unification. Mapping products from different e-commerce sites to a unified, standard taxonomy enables a better browse, search and discovery experience. We have developed a hybrid approach combining text-based and visual classification, which considers both the existing product metadata and the image content to boost performance.
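One simple way to picture the hybrid text and visual classification mentioned in the last point is late fusion: each classifier scores the candidate taxonomy categories, and the scores are blended before picking a winner. This is a sketch under assumptions, not the method ViSenze describes in detail; the category names, scores, `text_weight` parameter and both function names are hypothetical.

```python
def fuse_scores(text_scores, visual_scores, text_weight=0.5):
    """Blend per-category scores from a text and a visual classifier.

    A category missing from one classifier is treated as score 0.0 there.
    """
    categories = set(text_scores) | set(visual_scores)
    return {
        category: text_weight * text_scores.get(category, 0.0)
        + (1.0 - text_weight) * visual_scores.get(category, 0.0)
        for category in categories
    }


def map_to_taxonomy(text_scores, visual_scores, text_weight=0.5):
    """Return the (category, fused_score) with the highest blended score."""
    fused = fuse_scores(text_scores, visual_scores, text_weight)
    best = max(fused, key=fused.get)
    return best, fused[best]


# Made-up scores: the merchant's metadata is ambiguous, the image is not.
text_scores = {"Shoes/Sneakers": 0.55, "Shoes/Boots": 0.45}
visual_scores = {"Shoes/Sneakers": 0.20, "Shoes/Boots": 0.80}

category, score = map_to_taxonomy(text_scores, visual_scores)
print(category)
```

Here the product title slightly favours sneakers, but the image evidence is strong enough to map the item to boots once both signals are combined.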

The new wave of innovation for visual shopping

The possibilities are endless, and we have also worked on a few new innovative approaches to enhance the visual shopping experience.

Personalized Visual Shopping Experience

A superior AI shopping assistant not only brings the right product to you, but also provides the right size recommendation based on your body type. This led us to partner with Pixibo to offer the industry’s first Smart Product Recommendation and Sizing Solution.

The solution has launched on both Zalora, Asia’s leading online fashion destination, and MAPemall, Indonesia’s leading lifestyle retailer and ecommerce marketplace. Consumers are able to visually search for products they are inspired to purchase from various global brands such as Agnes B, Michael Kors, Calvin Klein, Lacoste, Nautica, Steve Madden, Reebok, Adidas, Sephora, and more, while also being shown similar product recommendations based on their size and fit preferences.

Use Edge Computing to Provide Smoother Hybrid Product Recognition

Our edge computing capability opens up more interactive visual shopping experiences with better personal data security. With a pure cloud deployment, data transfer between devices and the cloud takes time, making high-speed interactions challenging. To enable interactive product scanning and real-time recognition, we took a new approach: adapting our deep learning models to device hardware rather than over-optimising for cloud speed.

With pure edge computing or a hybrid approach, brands and retailers can power brand new in-store shopping experiences. By simply scanning a product of interest with their phone, shoppers can check the latest product details, order their size to try on, or even purchase directly without a shop assistant around. Our on-device scanning capability could also be adopted by other mobile applications to enable AR experiences.
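A hybrid deployment of the kind described above can be sketched as a confidence-gated fallback: answer on the device when the small model is sure, and pay the network round trip only when it is not. This is an illustrative assumption about how such routing might work, not ViSenze's actual design; `recognize_hybrid`, the `min_confidence` threshold and the two stand-in models are hypothetical.

```python
def recognize_hybrid(image, edge_model, cloud_model, min_confidence=0.8):
    """Try the on-device model first; fall back to the cloud if unsure.

    Both models are callables returning (label, confidence).
    Returns (label, source) where source is "edge" or "cloud".
    """
    label, confidence = edge_model(image)
    if confidence >= min_confidence:
        return label, "edge"
    label, _ = cloud_model(image)
    return label, "cloud"


# Stand-in models with canned answers, for illustration only.
def fake_edge_model(image):
    if image == "clear_photo":
        return "sneaker", 0.93
    return "unknown", 0.41


def fake_cloud_model(image):
    return "limited-edition sneaker", 0.97


print(recognize_hybrid("clear_photo", fake_edge_model, fake_cloud_model))
print(recognize_hybrid("blurry_photo", fake_edge_model, fake_cloud_model))
```

The threshold is the tuning knob: raising it sends more traffic to the cloud for accuracy, lowering it keeps more interactions on the device for speed and privacy.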

Fashion Attributes Recognition.

To build effective user experiences and ensure that the product range is discoverable by consumers, detailed product attribution is essential. We have leveraged deep domain expertise to build a comprehensive taxonomy of fashion products, and with our computer vision technology we are able to recognise 70 different fashion categories and over 200 attributes for those categories. Retailers can leverage these to increase the relevance of their range, ensure a superior product discovery experience and build personalised campaigns that target consumers with relevant products matching their needs.
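Attribute recognition of this kind typically ends with a step that turns model scores into tags. The sketch below shows one plausible version of that step under assumptions: the attribute names, values, scores and the `min_score` cut-off are invented for illustration, and the upstream model that would produce the scores is not shown.

```python
def tag_product(attribute_scores, min_score=0.6):
    """Pick the best-scoring value per attribute, keeping only confident ones.

    attribute_scores maps attribute name -> {value: score}.
    Attributes whose best value scores below min_score are left untagged.
    """
    tags = {}
    for attribute, value_scores in attribute_scores.items():
        best_value = max(value_scores, key=value_scores.get)
        if value_scores[best_value] >= min_score:
            tags[attribute] = best_value
    return tags


# Hypothetical model output for one dress image.
scores = {
    "sleeve_length": {"long": 0.91, "short": 0.09},
    "pattern": {"floral": 0.48, "striped": 0.52},
    "neckline": {"v-neck": 0.77, "crew": 0.23},
}

tags = tag_product(scores)
print(tags)
```

Leaving the ambiguous `pattern` attribute untagged, rather than guessing, keeps low-confidence labels out of search filters and campaigns.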

Visual shopping + 3D experiences for more engaging product browsing on ecommerce sites or apps

With increasing investment in Augmented Reality (AR) over the last few years, AR has sparked renewed interest from big brands, and it is even more relevant for retailers today given the impact of Covid-19. AR allows brands to offer unique and immersive digital experiences that engage customers without their having to leave the confines of their house, if they so choose. One interesting application ViSenze has developed combines live visual search on the smartphone camera with AR-based 3D product effects in a seamless experience, letting consumers virtually sample the product from wherever they are.

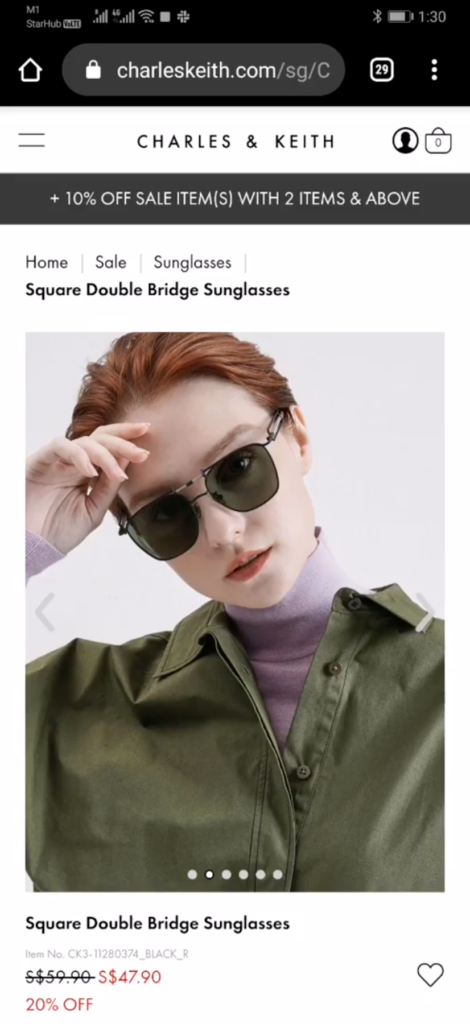

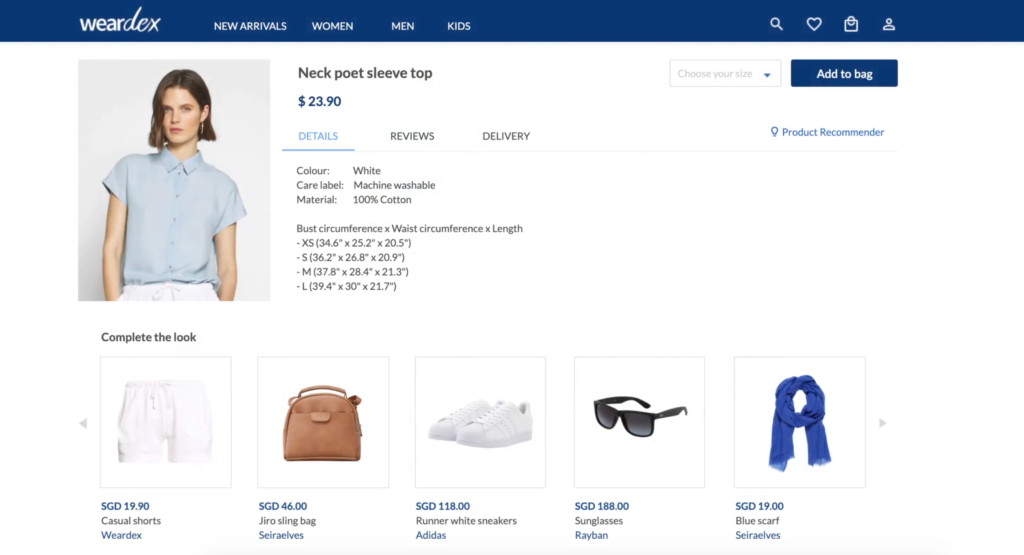

Complementary Products Recommendation

Closet recommendation offers an interactive online mix and match experience. Learning from the latest trends, fashion stylists and social media influencers, our AI assistant helps people improve their fashion styling by automatically picking apparel and fashion accessories that go well with their own closet. Our technology takes inspiration, diversity and gamification into consideration. Not only will consumers enjoy the shopping and matching journey at home, but retailers and ecommerce businesses can also use this idea to address shoppers’ concerns about how to wear their newly purchased items.

Challenges and Ways to build robust AI solutions

Current AI models can only achieve acceptable accuracy when well-defined training data has been collected. There are a few operational challenges in developing a robust AI solution, and we have developed processes and tools to tackle them.

Deep Learning Model: No one-size-fits-all

Different use cases result in different problem definitions, data acceptance criteria or even image types. In retail operations, use cases range from end-user shopping experiences and supply chain to product management, retail analytics and so on. To stay adaptable and optimise performance for each, we have continuously improved the following areas:

- A robust machine learning workflow and internal tooling to keep up with fast-moving dynamics.

- A customer-obsessed R&D and service team that sits with every customer to co-define the solution.

Domain Knowledge is Essential But Difficult to Transfer

A domain expert can recognize hundreds of small yet distinguishing clues to identify objects or attributes. This comes from years of training and experience. To train an AI model to do similar tasks, we have to continuously translate this knowledge into data requirements and standards:

- We built an in-house domain expert team that works with the R&D team on a daily basis. While the experts impart knowledge to the team, the team in turn helps them articulate it in a technical way.

- We build best practices into standard workflows so that external knowledge is transferred effectively as well.

Production latency and scalability issues

In an experimental setup, more complicated deep learning networks usually deliver better recognition accuracy but also consume more computing resources and incur higher latency. In a production environment, there can be many real-world constraints from hardware, cost and latency.

ViSenze has deep experience in maintaining low latency and high service availability while delivering excellent accuracy, with deployments across multiple data centers globally. This is the result of years of investment in continuous algorithm and production infrastructure optimisation.
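The accuracy versus latency trade-off described in this section can be made concrete as a selection problem: among candidate models, pick the most accurate one that still fits the latency budget. The sketch below is illustrative only; the `ModelProfile` type, `pick_model` function and all accuracy and latency figures are invented, not measurements of any ViSenze model.

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str
    accuracy: float    # offline evaluation accuracy, 0..1
    latency_ms: float  # measured per-request latency on target hardware


def pick_model(profiles, latency_budget_ms):
    """Return the most accurate model within the latency budget, or None."""
    eligible = [p for p in profiles if p.latency_ms <= latency_budget_ms]
    if not eligible:
        return None
    return max(eligible, key=lambda p: p.accuracy)


# Made-up profiles: larger networks are more accurate but slower.
PROFILES = [
    ModelProfile("small", accuracy=0.86, latency_ms=20.0),
    ModelProfile("medium", accuracy=0.91, latency_ms=70.0),
    ModelProfile("large", accuracy=0.94, latency_ms=250.0),
]

choice = pick_model(PROFILES, latency_budget_ms=100.0)
print(choice.name if choice else "no model fits the budget")
```

In practice cost and hardware availability would be added as further constraints, but the shape of the decision stays the same.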

It is THE time to bring AI to your customers’ shopping experience

As mentioned here (link), Facebook has released a few AI abilities to enhance the shopping experience. However, true to it being a Walled Garden, these abilities are proprietary to Facebook Shopping and not for other companies to use.

As a leading visual recognition company in retail and ecommerce, ViSenze makes most of the technologies powering its visual shopping search engine commercially available. As a retailer or e-commerce business, you can immediately arm yourself with the same level of technology and user experience as Facebook Shopping to bring your customer experience to the next level. Here is a list of solutions ViSenze has already brought to the market as commercial services.

| Solution | ViSenze | Facebook |
| --- | --- | --- |
| Mobile visual search (cloud, hybrid) | Y, ready to integrate | Y, but proprietary |
| Visually similar product recommendations | Y, ready to integrate | Y, but proprietary |
| Product visual attribute tagging | Y, ready to integrate | Y, but proprietary |
| Exact product identification | Y, ready to integrate | Y, but proprietary |
| Product cataloging | Y, open for integration | Y, but proprietary |

All the above ViSenze solutions are accessible by simply plugging in via an API or SDK. We also provide a zero-barrier, mobile-friendly web widget with ViSenze best practices integrated (link).

We are striving to work with our retail and ecommerce partners to bring “Visual” together with “Shopping”. This can create touchpoints for new shopper acquisition and build a bridge that brings shoppers from content communities or various browsing experiences into shopping experiences. We always work with our partners to analyze the data points related to visual shopping and the ROI of adopting our solutions, and to provide actionable insights.

We would love to hear from you to understand more about your business problem and recommend the right solution to be built.

FAQs

Question:- How does edge computing work?

Answer:- Edge computing is a distributed computing framework that brings computation closer to the data sources, which reduces bandwidth use and latency. It enables data to be processed at greater volumes and speeds, delivering improved response times and faster insights.

Question:- How do we define a deep learning model?

Answer:- Deep learning is a machine learning technique that uses multi-layer neural networks, loosely inspired by the human brain. A deep learning model learns tasks such as classification by example, i.e., directly from images, sound, and text. By learning to group data in this way, deep learning models can achieve remarkable accuracy, sometimes exceeding human-level performance on specific tasks.

Question:- What are Facebook Shops?

Answer:- Facebook Shops is an e-commerce platform that enables retailers to sell their products on Facebook and Instagram. It is a free tool that businesses can use to create online storefronts, making the process of going digital easier than before. Powered by AI and machine learning systems, it acts as an assistant that searches and ranks products while customizing product recommendations to the personal tastes of its users.